– continued from part 2.

My first attempt at creating a 100 Hz real-time Linux kernel loop did not work as expected. After getting some good pointers from the linux-rt-users mailing list and investigating further myself, I decided to reimplement the loop using usleep_range() (further described here). This function simply sleeps for a time between a given minimum and maximum, so it does not need any callbacks or similar. It should therefore be straightforward to create a new thread that calls usleep_range() after each iteration, and then schedule it with the real-time scheduler.

So, attempt2:

#include <linux/interrupt.h>

#include <linux/err.h>

#include <linux/irq.h>

#include <linux/clk.h>

#include <linux/list.h>

#include <linux/kthread.h>

#include <linux/sched.h>

#include <linux/rtmutex.h>

#include <linux/hrtimer.h>

#include <linux/delay.h>

static struct task_struct *thread_10ms;

/**

* Thread for 10ms polling of bus devices

*/

static int bus_rt_timer_thread(void *arg) {

ktime_t startTime = ktime_get();

s64 timeTaken_us; /* declared up front: kernel code avoids declarations after statements */

while(!kthread_should_stop()) {

pollBusHardware();

timeTaken_us = ktime_us_delta(ktime_get(), startTime);

if(timeTaken_us < 9900) {

usleep_range(9900 - timeTaken_us, 10100 - timeTaken_us);

}

startTime = ktime_get();

}

printk(KERN_INFO "RT Thread exited\n");

return 0;

}

int __init bus_timer_interrupt_init(void) {

struct sched_param param = { .sched_priority = MAX_RT_PRIO - 1 };

thread_10ms = kthread_create(bus_rt_timer_thread, NULL, "bus_10ms");

if (IS_ERR(thread_10ms)) {

printk(KERN_ERR "Failed to create RT thread\n");

return PTR_ERR(thread_10ms); /* propagate the actual error from kthread_create() */

}

sched_setscheduler(thread_10ms, SCHED_FIFO, &param);

wake_up_process(thread_10ms);

printk(KERN_INFO "RT Timer thread installed.\n");

return 0;

}

void __exit bus_timer_interrupt_exit(void) {

kthread_stop(thread_10ms);

printk(KERN_INFO "RT Thread removed.\n");

}

bus_timer_interrupt_init() does the same as before, but the created bus_rt_timer_thread() is now kept running. Instead of just spawning a timer and returning, it calls pollBusHardware(), then sleeps for somewhere between 9.9 ms and 10.1 ms minus the time spent in pollBusHardware(), and starts again. If pollBusHardware() ever takes 9.9 ms or more, the sleep is skipped entirely.
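The sleep-window arithmetic in the loop can be checked in isolation. The following is a minimal userspace sketch, not part of the kernel module: the helper name sleep_window_us() and the two PERIOD_* constants are made up for illustration, and the function only mirrors the if-statement and the two subtractions from attempt2.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PERIOD_MIN_US 9900   /* lower bound of the target period */
#define PERIOD_MAX_US 10100  /* upper bound of the target period */

/*
 * Hypothetical helper (not in the module above): given the time already
 * spent in this iteration, compute the min/max values that would be
 * passed to usleep_range(). Returns false when the work has already
 * used up the minimum period, in which case the loop skips the sleep.
 * The output parameters are left untouched in that case.
 */
bool sleep_window_us(int64_t time_taken_us, int64_t *min_us, int64_t *max_us)
{
    assert(time_taken_us >= 0);

    if (time_taken_us >= PERIOD_MIN_US)
        return false;

    *min_us = PERIOD_MIN_US - time_taken_us;
    *max_us = PERIOD_MAX_US - time_taken_us;
    return true;
}
```

For example, if pollBusHardware() took 1.5 ms, the thread would ask to sleep for between 8.4 ms and 8.6 ms.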

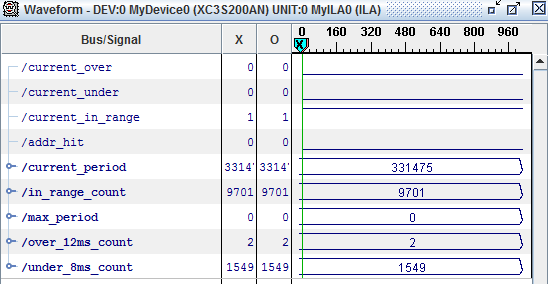

Giving this the same stress treatment as attempt1, I got the following after 30 seconds: (initial counts are ~6500, 2 and 1549 for in_range_count, over_12ms_count and under_8ms_count respectively)

This is much better. All periods are now in the 8-12 ms range (note that max_period is not set, as this only happens if over_12ms_count is triggered), and this doesn’t change, even when letting it run for an additional ~30 minutes.

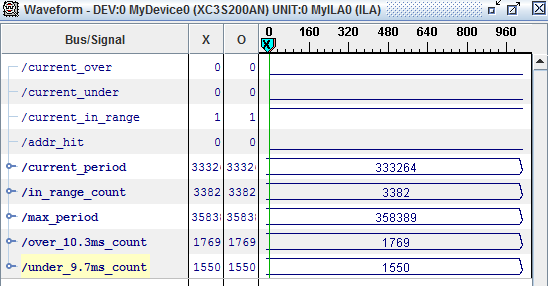

To get a better look at the apparently much-improved performance, I tightened the FPGA thresholds so that they now measure from 9.7 ms (323000 clock cycles) to 10.3 ms (343000 clock cycles). The results after 30 seconds under load: (initial counts are 44, 1729 and 1550 for in_range_count, over_10.3ms_count and under_9.7ms_count respectively)

There have been a few periods (40) above the threshold, but looking at max_period, the maximum period has been just below 10.9 ms. Much, much better than with attempt1, and most probably due to the fact that the loop is now actually running with real-time scheduling. Also, letting it run for an additional ~30 minutes resulted in a maximum period of just below 11 ms. Not perfect, but more than good enough for our use.
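To make the counters easier to reason about, here is a rough C model of how the FPGA measurement could classify each period. This is only a sketch of the behaviour described in the text, not the actual FPGA logic: the struct and function names are invented, and treating a period exactly on a threshold as in range is an assumption. The two threshold values and the rule that max_period is only updated when the upper threshold is exceeded come from the description above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define UNDER_THRESHOLD_CYCLES 323000u  /* 9.7 ms, value from the text */
#define OVER_THRESHOLD_CYCLES  343000u  /* 10.3 ms, value from the text */

/* Hypothetical mirror of the counters read back from the FPGA. */
struct period_stats {
    uint32_t in_range_count;
    uint32_t over_count;
    uint32_t under_count;
    uint32_t max_period;  /* only updated when the upper threshold is exceeded */
};

/* Classify one measured period (in clock cycles) and update the counters. */
void record_period(struct period_stats *s, uint32_t period_cycles)
{
    assert(s != NULL);

    if (period_cycles < UNDER_THRESHOLD_CYCLES) {
        s->under_count++;
    } else if (period_cycles > OVER_THRESHOLD_CYCLES) {
        s->over_count++;
        if (period_cycles > s->max_period)
            s->max_period = period_cycles;
    } else {
        /* Assumption: a period exactly on a threshold counts as in range. */
        s->in_range_count++;
    }
}
```

With this model, max_period stays at zero for as long as no period exceeds the upper threshold, which matches the earlier observation that max_period was not set in the 8-12 ms run.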

Refining it even further

The performance was now acceptable, but I was not completely happy with usleep_range() using relative time-periods (which meant that I had to manually measure the time spent in the loop). There doesn’t seem to be any version of usleep_range() working with absolute time, so I instead had a little dig inside the implementation of the function.

usleep_range() is implemented as follows in kernel/timer.c:

/**

* usleep_range - Drop in replacement for udelay where wakeup is flexible

* @min: Minimum time in usecs to sleep

* @max: Maximum time in usecs to sleep

*/

void usleep_range(unsigned long min, unsigned long max)

{

__set_current_state(TASK_UNINTERRUPTIBLE);

do_usleep_range(min, max);

}

EXPORT_SYMBOL(usleep_range);

And, moving on to do_usleep_range() (in the same file):

static int __sched do_usleep_range(unsigned long min, unsigned long max)

{

ktime_t kmin;

unsigned long delta;

kmin = ktime_set(0, min * NSEC_PER_USEC);

delta = (max - min) * NSEC_PER_USEC;

return schedule_hrtimeout_range(&kmin, delta, HRTIMER_MODE_REL);

}

So in the end, usleep_range() uses schedule_hrtimeout_range() – a function that seems to belong to the High-Resolution timer API, but that I can’t find any description of except for the one I just linked to. In any case though, the function does exactly what I needed – sleep with a delay specified in absolute time.
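The conversion that do_usleep_range() performs is simple enough to reproduce outside the kernel. The sketch below is a userspace model only: struct hrtimeout_args and usleep_range_args() are names invented here, and plain integers stand in for ktime_t. It just shows which two values end up being handed to schedule_hrtimeout_range().

```c
#include <assert.h>
#include <stdint.h>

#define NSEC_PER_USEC 1000

/* Hypothetical container for the two values passed on by do_usleep_range(). */
struct hrtimeout_args {
    int64_t expires_ns;  /* earliest expiry, relative to now (kmin) */
    uint64_t delta_ns;   /* slack allowed after expires_ns (delta) */
};

/* Model of the min/max (microseconds) to expiry/slack (nanoseconds) conversion. */
struct hrtimeout_args usleep_range_args(unsigned long min_us, unsigned long max_us)
{
    struct hrtimeout_args a;

    assert(min_us <= max_us);

    a.expires_ns = (int64_t)min_us * NSEC_PER_USEC;
    a.delta_ns = (uint64_t)(max_us - min_us) * NSEC_PER_USEC;
    return a;
}
```

So a call such as usleep_range(9900, 10100) boils down to a relative expiry of 9.9 ms with 200 us of slack.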

So, my third and final attempt at a 100 Hz real-time Linux kernel loop:

#include <linux/interrupt.h>

#include <linux/err.h>

#include <linux/irq.h>

#include <linux/clk.h>

#include <linux/list.h>

#include <linux/kthread.h>

#include <linux/sched.h>

#include <linux/rtmutex.h>

#include <linux/hrtimer.h>

#include <linux/delay.h>

static struct task_struct *thread_10ms;

static int bus_rt_timer_thread(void *arg) {

ktime_t timeout = ktime_get();

while(!kthread_should_stop()) {

pollBusHardware();

timeout = ktime_add_us(timeout, 10000);

__set_current_state(TASK_UNINTERRUPTIBLE);

schedule_hrtimeout_range(&timeout, 100, HRTIMER_MODE_ABS);

}

return 0;

}

int __init bus_timer_interrupt_init(void) {

struct sched_param param = { .sched_priority = MAX_RT_PRIO - 1 };

thread_10ms = kthread_create(bus_rt_timer_thread, NULL, "bus_10ms");

if (IS_ERR(thread_10ms)) {

printk(KERN_ERR "Failed to create RT thread\n");

return PTR_ERR(thread_10ms); /* propagate the actual error from kthread_create() */

}

sched_setscheduler(thread_10ms, SCHED_FIFO, &param);

wake_up_process(thread_10ms);

return 0;

}

void __exit bus_timer_interrupt_exit(void) {

kthread_stop(thread_10ms);

printk(KERN_INFO "RT Thread removed.\n");

}

Since I’m calling schedule_hrtimeout_range() with HRTIMER_MODE_ABS, I can now simply advance my timeout by 10 ms using ktime_add_us(), instead of measuring the time taken in pollBusHardware() and subtracting it from the 10 ms delay. I’ve chosen a range delta of 100 us, so each wake-up should land between 0 and 100 us after its 10 ms deadline.
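The effect of the absolute deadline can be illustrated with a small userspace model. This is a sketch under simplifying assumptions: the names are hypothetical, times are plain nanosecond integers rather than ktime_t, and scheduling latency is ignored, so only the timer's own window is modelled.

```c
#include <assert.h>
#include <stdint.h>

#define PERIOD_NS 10000000LL  /* 10 ms, as added by ktime_add_us(timeout, 10000) */
#define SLACK_NS    100000LL  /* 100 us range delta */

/* Hypothetical description of when the timer may fire for one iteration. */
struct wake_window {
    int64_t earliest_ns;
    int64_t latest_ns;
};

/*
 * Window for the k-th wake-up (k = 1 is the first sleep) of a loop that
 * started at t0_ns. The deadline depends only on t0 and k, not on how
 * long the work in each iteration took.
 */
struct wake_window wake_window_for(int64_t t0_ns, int64_t k)
{
    struct wake_window w;

    assert(k >= 1);

    w.earliest_ns = t0_ns + k * PERIOD_NS;
    w.latest_ns = w.earliest_ns + SLACK_NS;
    return w;
}
```

Note that under this model the gap between two consecutive wake-ups is bounded by 9.9 ms and 10.1 ms, since an early wake-up can follow a late one. The deadlines themselves never shift.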

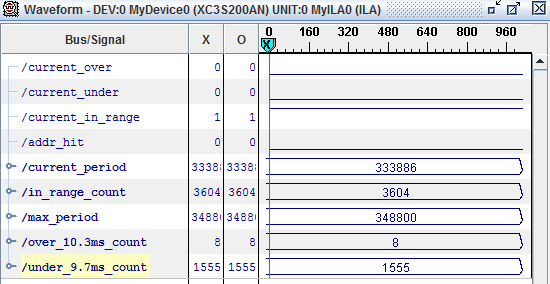

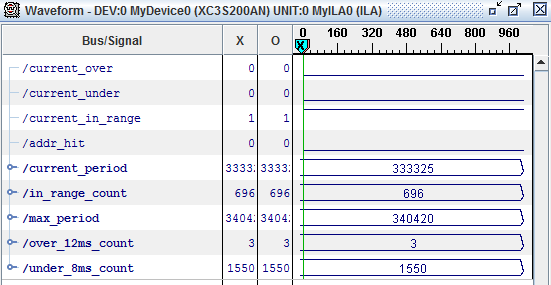

Let’s see: (initial counts are ~500, 4 and 1550 for in_range_count, over_10.3ms_count and under_9.7ms_count respectively)

This is even better than before. There have only been a handful of periods above or below the desired range, and the maximum period is below 10.6 ms – and only slightly higher (but still not above 10.7 ms) even after 30 minutes. In addition to this, the code is much cleaner than in attempt2, since there is no longer any need for doing any “manual” time measurement.

Conclusion

The initial implementation worked just fine as long as the system was unloaded, but as soon as the stress test was started, the performance collapsed and periods were taking up to six times longer than intended. Looking further into the code, I managed to improve the implementation down to a much more respectable maximum of 6% longer duration.

The main things I’ve learned from this:

- Real-time software needs to be stress-tested to make sure that it actually *is* real-time

- The Linux kernel contains a lot of functionality that is only sparsely documented, and that you will only find if you know where to look

- Mailing lists can be really, really helpful – I’d never have made it this far without the help of the people on the linux-rt-users mailing list – so thanks!

All in all I hope these blog posts will be helpful for others that need to accomplish a similar task, be it about implementing real-time kernel loops, or testing real-time functionality.

Any comments or questions are of course more than welcome.

Hi …awesome post ….like it.

I created a workqueue using create_workqueue() and changed its scheduling policy to SCHED_FIFO. The thread sleeps if there is no work. I am not sure whether this approach will give the maximum throughput. What are your thoughts? I chose this approach to save CPU cycles when there is no work.

Thanks for your post, it helped me a lot.

pollBusHardware() ?

Where is the code for this function?

pollBusHardware() is just the function used to poll the custom bus hardware – its contents are not relevant for the example.